We are in the midst of a modern mental health crisis, an epidemic increasingly recognized but far from resolved. Gen Z has rallied around openness as a solution, calling on peers to talk more, share more, and destigmatize mental health struggles. This cultural shift has driven an unprecedented rise in the demand for mental health services, particularly psychotherapy, among young adults. Approximately 12.7% of young adults report unmet mental health needs (a 2.5x increase from 10 years ago). In a country with over a million lawyers and 200,000 therapists, demand has rapidly outstripped supply.

As is the case for most skilled professional services, scarcity of labor contributes to two failure points: steep financial barriers and excessively long wait times to see an experienced therapist. Nearly 25% of American adults experiencing frequent mental distress did not seek care because of cost barriers. Even when care is financially within reach, therapists themselves may not be. Rural residents in the U.S. travel an average of 26 minutes to reach any mental healthcare facility.

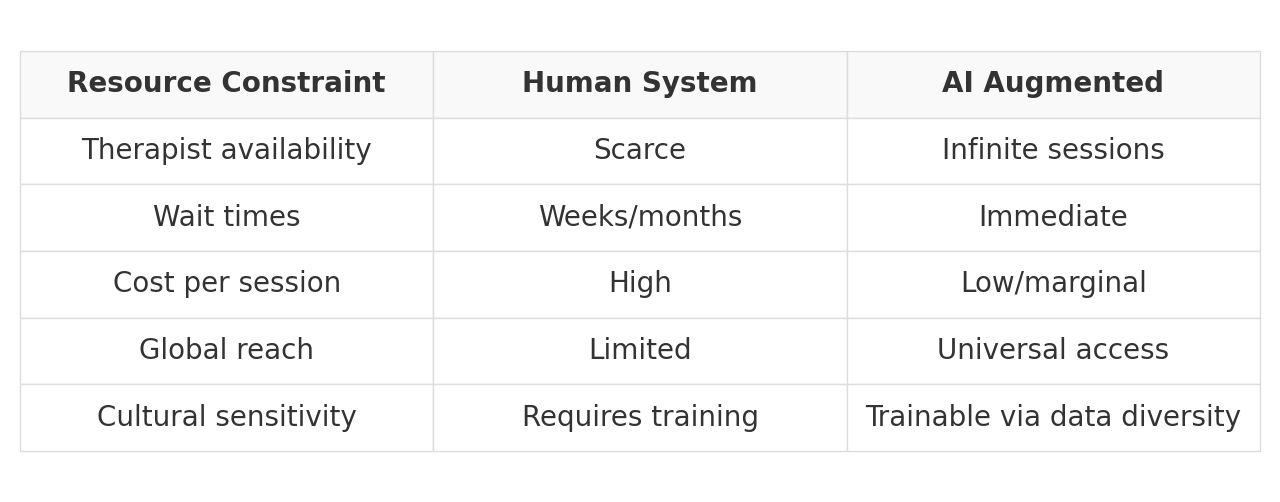

AI, theoretically, inverts this model. Infinitely scalable and personalized AI therapy allows anyone with internet access to receive “treatment”. These platforms, delivered through simple mobile apps, operate continuously, providing cognitive interventions and judgment-free interaction as they support users through emotional distress. By decoupling care from human labor, these systems introduce an incredible level of accessibility and immediacy.

AI therapy generally falls into three categories:

Chatbot Therapists: Text- or voice-based AI (e.g. Woebot, Wysa) that simulates therapeutic conversations and guides users through CBT-based coping strategies for anxiety and depression.

Virtual Avatar Therapists: AI-powered avatars (e.g. Replika, Ellie) that respond to vocal tone, facial expressions, and user cues, creating more interactive, human-like therapy experiences.

Emotion-Sensing AI: Systems that analyze vocal, facial, and behavioral signals to detect emotional states in real time (e.g. Beyond Verbal, openSMILE), enabling personalized, adaptive support.

And they seem to be working, sort of… Recent findings support their efficacy on a grand scale. In a recent clinical trial, Therabot decreased symptoms of depression by 51% after 8 weeks. Within 2 weeks, Youper reduced anxiety symptoms by 43%. To put this in perspective, these outcomes match or exceed many FDA-approved pharmaceutical interventions for the same conditions.

The Cost of Unlimited Access

But, in fixing the scarcity of “care”, we may be engineering an entirely new set of risks at a scale we have never faced before.

We are already seeing these adverse consequences:

The creation of new mental health symptoms

The intensification of existing disordered thought patterns

Dehumanization: A growing addiction to, or preference for, “virtual reality”

In the first two instances, we’re seeing AI exacerbate the sickness by preying on the symptoms. The sycophantic tendencies of these models reassure people of delusional or unfounded beliefs. Trained on sympathetic language patterns, AI takes on the guise of a real person offering comfort and support. Unlike humans, however, these models do not judge, tire, or hold any boundaries. The result is extreme enablement.

We are already seeing this in individuals struggling with obsessive-compulsive disorder (OCD). Plagued by ruminative thought cycles, people with OCD endlessly probe AI to alleviate anxiety, which produces a counterproductive result: obsession, anxiety, compulsion, relief, repeat. In a few highlighted cases, patients diagnosed with contamination OCD repeatedly questioned AI about the cleanliness of their hands, doorknobs, and steering wheels. Another patient with a fear of flying relentlessly pressed AI about the reliability of certain plane models, statistics on the number of plane deaths per year, and other safety minutiae. Whereas a mental health professional would intervene with exposure therapy, the AI models acted as accomplices in the OCD cycle.

I argue there’s a more insidious trend at play, though: as we outsource our vulnerability to machines, we may start to find more interest in the machines than in each other.

A recent CBS special highlighted individuals who converse with AI around the clock, outsourcing their companionship to a machine. Surprisingly, these individuals are neither single nor isolated from family and friends. They simply prefer to engage with a machine that serves as a near-constant “yes-man.” While the appeal of a partner who offers unconditional support is understandable, it strips necessary friction from the experience of emotional connection. It hollows out the internal mechanisms that make human interaction meaningful in the first place.

This is the quiet machinery of dehumanization. It does not occur in grand, dystopian strokes. Rather, it occurs in the gradual acceptance of connection without vulnerability, interaction without intimacy.

A Collaborative Model

The same tribal debate occurs every time a new technology emerges: is it good or is it bad? True progress does not live in these absolutes. Rather than advocating solely for AI therapists or for a purely human workforce, we can pioneer a plan with self-discovery and awareness at its center.

A Human-AI Collaborative model would fuse human insight with machine intelligence to reach exceptional new depths of self-understanding. That is the panacea.

This means scaling therapy services methodically through AI-supported treatment plans that extend in-person care. The collaborative model pairs the strengths of machine learning with those of human clinicians to redefine the boundaries of care, allowing therapists to expand their bandwidth while addressing the growing demand for mental health support.

Between sessions, patients can journal or complete therapeutic exercises, such as CBT worksheets and breathing practices, with an AI tool. In return, they receive immediate, evidence-based responses that reinforce coping strategies and offer a sense of being heard when live support is unavailable. These tools, built on clinically researched psychotherapeutic frameworks, help sustain progress and maintain patient engagement, often providing levels of consistency and availability that human therapists cannot match.

For therapists, AI is becoming a true clinical partner as it tracks emotional trends, flags risks, and offers a richer, real-time view of patient wellbeing. Acting as co-pilots, these systems draft notes, suggest treatments, and surface hidden issues. In doing so, they expand the boundaries of care and scale access in ways once unimaginable.
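To make "tracks emotional trends, flags risks" a little more concrete, here is a minimal Python sketch of one way such a co-pilot might work. Everything in it is an assumption for illustration: the `CheckIn` record, the 1 to 10 mood scale, and the thresholds are hypothetical and not drawn from any real product or clinical guideline. The point is only that the system surfaces a pattern, and the therapist decides what to do with it.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CheckIn:
    """One between-session self-report (hypothetical structure)."""
    day: int   # days since the start of treatment
    mood: int  # self-reported mood, 1 (lowest) to 10 (highest)


def mood_slope(checkins):
    """Least-squares slope of mood over time, in mood points per day."""
    if len(checkins) < 2:
        return 0.0
    xs = [c.day for c in checkins]
    ys = [c.mood for c in checkins]
    x_bar, y_bar = mean(xs), mean(ys)
    denom = sum((x - x_bar) ** 2 for x in xs)
    if denom == 0:
        # All check-ins fall on the same day, so no trend can be estimated.
        return 0.0
    return sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / denom


def needs_review(checkins, slope_threshold=-0.3, low_mood=3):
    """Flag a patient for therapist review.

    The thresholds are illustrative placeholders, not clinical values.
    A flag fires if the latest mood is very low or mood is falling quickly.
    """
    if not checkins:
        return False
    latest = max(checkins, key=lambda c: c.day)
    return latest.mood <= low_mood or mood_slope(checkins) <= slope_threshold


declining = [CheckIn(0, 8), CheckIn(1, 7), CheckIn(2, 6), CheckIn(3, 5)]
stable = [CheckIn(0, 7), CheckIn(1, 7), CheckIn(2, 7), CheckIn(3, 7)]
print(needs_review(declining), needs_review(stable))
```

Note that the sketch only raises a flag. It never responds to the patient on its own, which keeps the human therapist as the one who interprets the signal and intervenes.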

A continuous three-way flow of information between patients, therapists, and AI systems ensures that all parties' needs are met. It transforms mental health care from episodic check-ins into an ongoing and responsive relationship. Therapists stay attuned to their patients’ evolving needs, not just during sessions but in between them. And patients gain the reassurance that support is always within reach, without having to wait until the next appointment to be heard.

Priming Ourselves for Stronger Connections

Above all else, this joint model must be optimized for self-growth – the true aim of therapy in the first place. Imagine a therapeutic model where AI enables individuals to process their emotions more regularly and build self-awareness more rapidly so that when they do sit down with a therapist, they are able to show up with more clarity and presence. At its core, therapy is an instrument for growth. It allows us to excavate years of buried pain and misunderstanding, turning past wounds into a revitalized inner clarity.

This work is not easy. Deep personal growth is often gritty and exhausting. Any technology that we integrate into that sacred process must be in service of that end. Instead of falling prey to the either/or fallacy (human or machine), we should pursue a path that acknowledges the real risks of both therapeutic methods and capitalizes on their strengths.

Ultimately, connection remains the essence of our existence. We must pursue any therapeutic path that deepens our self-understanding and strengthens our bonds with others. The measure of any therapeutic intervention lies in its capacity to foster human flourishing. Let that be our guiding principle.